How it works

The Aptean BI Data Lake Connector streamlines data export from Dynamics 365 Business Central to:

- Azure Data Lake

- Microsoft Fabric

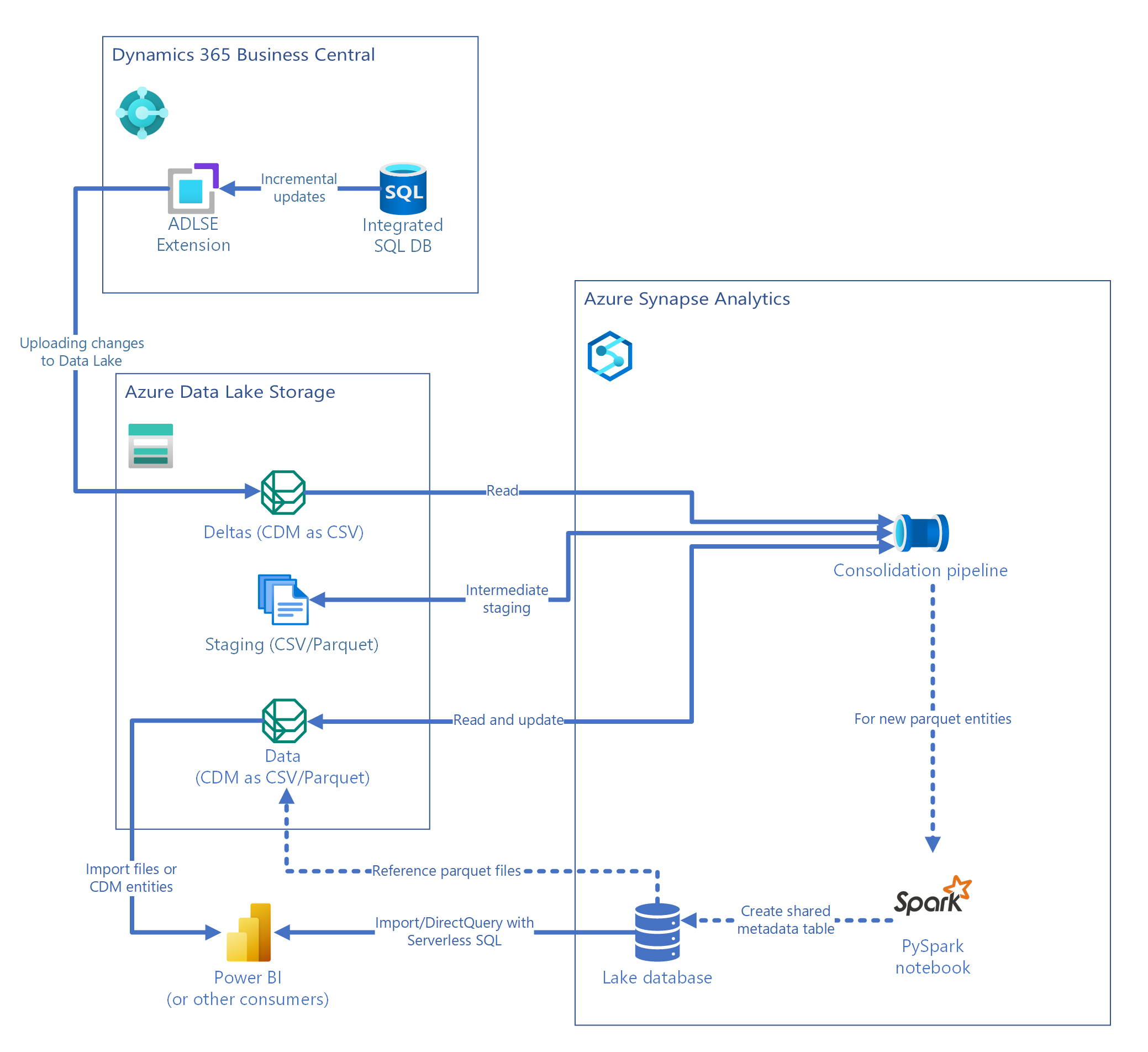

Export data to Azure Data Lake

Azure Data Lake is a scalable storage service for big data analytics, designed to store and analyze large volumes of structured, semi-structured, and unstructured data. The connector exports data from Dynamics 365 Business Central to Azure Data Lake in the Common Data Model (CDM) format. Azure Synapse Analytics then processes this data for scalable analysis, helping businesses make data-driven decisions and optimize performance.

For example, by exporting daily updates from Dynamics 365 Business Central to Azure Data Lake, a retail company can centralize its data for advanced analysis. Its data science team can then use Azure Synapse to optimize inventory and forecast demand, while leadership uses Power BI to make data-driven decisions that improve overall business efficiency and performance.

Exporting data from Dynamics 365 Business Central to Azure Data Lake involves two key components:

- The Azure Data Lake Storage Export (ADLSE) extension in Business Central exports incremental data updates to Azure Data Lake. The data is stored in CDM folder format, with a deltas.cdm.manifest.json file that defines the structure of the incremental updates.

- The Azure Synapse pipeline processes the exported data and consolidates the incremental updates into a final CDM data folder for analysis.
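To illustrate how a manifest exposes the exported entities, the following Python sketch reads a CDM manifest and maps each entity's name to its path. It assumes a trimmed, hypothetical manifest in which each item of the `entities` array carries `entityName` and `entityPath` fields; the deltas.cdm.manifest.json file that ADLSE actually writes may contain additional fields, and the `Customer` and `Item` entries are illustrative only.

```python
import json
from typing import Dict

# Hypothetical, heavily trimmed manifest for illustration. Only the
# "entities", "entityName", and "entityPath" fields are assumed here; the
# deltas.cdm.manifest.json written by ADLSE may contain more.
SAMPLE_MANIFEST = """
{
  "manifestName": "deltas",
  "entities": [
    {"entityName": "Customer", "entityPath": "Customer.cdm.json/Customer"},
    {"entityName": "Item", "entityPath": "Item.cdm.json/Item"}
  ]
}
"""


def list_entities(manifest_text: str) -> Dict[str, str]:
    """Map each entity name in a CDM manifest to its entity path."""
    manifest = json.loads(manifest_text)
    return {
        entity["entityName"]: entity["entityPath"]
        for entity in manifest.get("entities", [])
    }


if __name__ == "__main__":
    for name, path in list_entities(SAMPLE_MANIFEST).items():
        print(f"{name}: {path}")
```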

Data flow overview

The following steps explain how data moves from Business Central to Azure Data Lake and is processed for analytics.

- Incremental data updates from Business Central are sent to Azure Data Lake Storage by the ADLSE extension and stored in the deltas folder.

- The Azure Synapse pipeline detects new incremental updates and merges them into the data folder.

- The resulting data can be consumed by applications such as Power BI in the following ways:

  - CDM: via the data.cdm.manifest.json manifest

  - CSV/Parquet: via the underlying files for each individual entity inside the data folder

  - Spark/SQL: via shared metadata tables

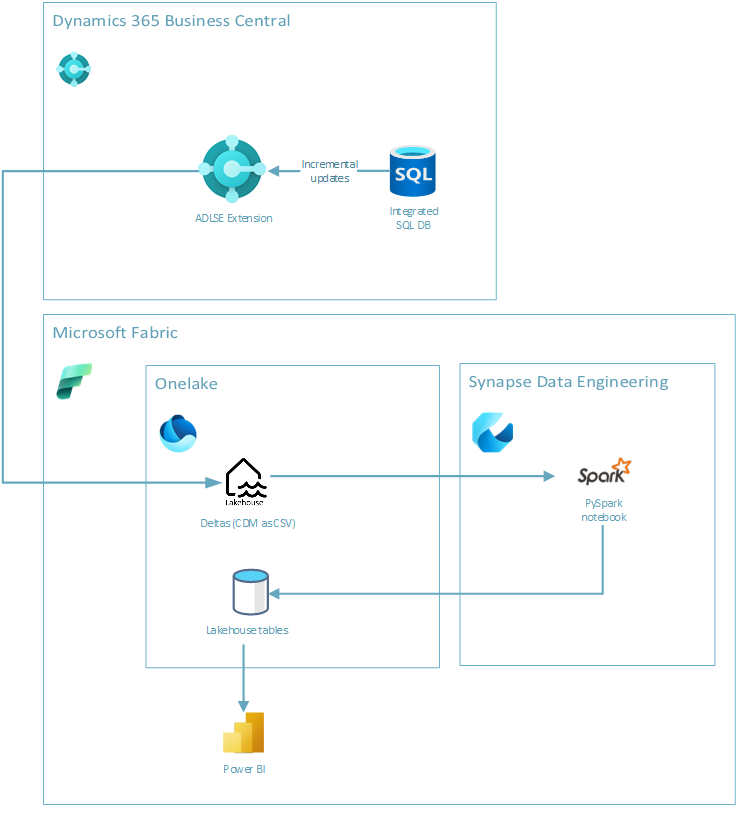
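The merge step in the Synapse pipeline can be pictured as an upsert: for every record, the newest version wins and deleted records drop out. The following Python sketch illustrates that idea in memory. It is not the pipeline's actual implementation; the `systemId` key, the `SystemModifiedAt` timestamp, and the `deleted` flag are assumed column names chosen for illustration.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]

# Assumed column names, for illustration only; the columns in the actual
# export may differ.
KEY = "systemId"               # primary key of each record
MODIFIED = "SystemModifiedAt"  # ISO-8601 timestamp in a uniform format
DELETED = "deleted"            # tombstone flag for removed records


def merge_deltas(data: Dict[str, Row], deltas: Iterable[Row]) -> Dict[str, Row]:
    """Return a new consolidated snapshot with the increments applied.

    The newest version of each record wins, and rows flagged as deleted
    remove the record. The input dict is not modified.
    """
    merged = dict(data)
    # Apply increments oldest-first so later changes override earlier ones.
    for row in sorted(deltas, key=lambda r: r[MODIFIED]):
        current = merged.get(row[KEY])
        if current is not None and current[MODIFIED] > row[MODIFIED]:
            continue  # consolidated copy is newer; ignore the stale row
        if row.get(DELETED):
            merged.pop(row[KEY], None)
        else:
            merged[row[KEY]] = row
    return merged


if __name__ == "__main__":
    data = {"A": {KEY: "A", MODIFIED: "2024-05-01T08:00:00Z", "Name": "Old"}}
    deltas = [
        {KEY: "A", MODIFIED: "2024-05-02T09:00:00Z", "Name": "New"},
        {KEY: "B", MODIFIED: "2024-05-02T09:30:00Z", "Name": "Added"},
        {KEY: "B", MODIFIED: "2024-05-02T10:00:00Z", DELETED: True},
    ]
    print(merge_deltas(data, deltas))
```

Processing the increments in timestamp order lets later changes override earlier ones, and skipping rows older than the consolidated copy keeps a late-arriving stale row from overwriting newer data.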

Export data to Microsoft Fabric

Microsoft Fabric is an integrated data platform designed to simplify data management, analytics, and business intelligence. The connector exports data from Dynamics 365 Business Central to Microsoft Fabric in the Delta Lake format, and the data is typically stored as Delta Parquet files within the Lakehouse. The Delta Lake format stores table versions efficiently, manages large-scale data, supports ACID transactions, and enables incremental data processing and time-travel queries.

For example, a manufacturing company uses Business Central to manage production, orders, and its supply chain. By exporting this data to Microsoft Fabric, the company can analyze production efficiency, machine downtime, and supply chain bottlenecks in real time to identify inefficiencies, predict maintenance needs, and reduce costs. Power BI turns this data into timely insights for quick, data-driven decisions, while Microsoft Fabric provides the underlying processing, analytics, and visualization for fast, interactive analysis.
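The versioning and time-travel behavior of the Delta Lake format can be illustrated with a toy model. The Python sketch below is not the Delta Lake protocol: real Delta tables record add and remove actions for Parquet files in a transaction log, whereas this hypothetical `VersionedTable` class simply keeps a full snapshot per commit. It only shows the idea that each committed write creates a new version and that earlier versions remain readable.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]


@dataclass
class VersionedTable:
    """Toy table (hypothetical, for illustration) with numbered versions.

    Version 0 is the empty table; every commit appends a new version.
    """

    # One full snapshot per version, keyed by record key. Real Delta tables
    # instead log which Parquet files were added or removed per commit.
    _versions: List[Dict[str, Row]] = field(default_factory=lambda: [{}])

    @property
    def latest_version(self) -> int:
        return len(self._versions) - 1

    def commit(self, upserts: Dict[str, Row], deletes: Tuple[str, ...] = ()) -> int:
        """Build the next snapshot in full, then publish it as a new version."""
        snapshot = dict(self._versions[-1])
        snapshot.update(upserts)
        for key in deletes:
            snapshot.pop(key, None)
        # Appending only after the snapshot is complete means readers never
        # see a half-applied commit (in this single-threaded toy).
        self._versions.append(snapshot)
        return self.latest_version

    def read(self, version: Optional[int] = None) -> Dict[str, Row]:
        """Return the latest version, or an older one ("time travel")."""
        if version is None:
            version = self.latest_version
        if not 0 <= version <= self.latest_version:
            raise ValueError(f"unknown version {version}")
        return dict(self._versions[version])


if __name__ == "__main__":
    table = VersionedTable()
    table.commit({"A": {"qty": 1}})
    table.commit({"A": {"qty": 5}, "B": {"qty": 2}})
    table.commit({}, deletes=("B",))
    print(table.read())   # {'A': {'qty': 5}}
    print(table.read(1))  # {'A': {'qty': 1}}
```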

Exporting data from Dynamics 365 Business Central to Microsoft Fabric involves two key components:

- A Business Central extension, Azure Data Lake Storage Export (ADLSE), which exports incremental data updates to the Lakehouse, where the increments are stored in .csv format.

- A template for creating a notebook that moves the delta files into a Delta Parquet table within the Lakehouse.

Data flow overview

The following steps explain how data moves from Business Central to Microsoft Fabric and is processed for analytics.

- The ADLSE extension moves incremental update data from Business Central into the deltas folder in the Microsoft Fabric Lakehouse.

- Triggering the notebook consolidates the increments into the Delta Parquet tables.

- The resulting data can be consumed by Power BI or other tools inside Microsoft Fabric.